From 3D model to simulated measurement data: Training networks in virtual space

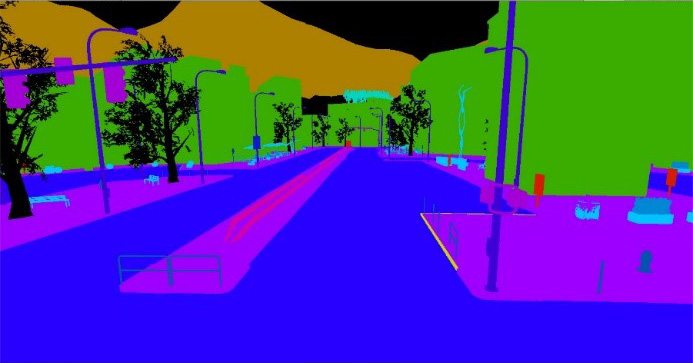

Synthetic training data is based on a three-dimensional data set that forms a virtual representation of the environment, e.g. a typical city scene (Fig. 1). Two outputs can be generated from this purpose-built digital 3D model world. The first is a photorealistic rendering (Fig. 2) that simulates a real camera image and reproduces the parameters of real, existing measuring devices. The second is a fully automated segmentation of the 3D model in which an object class is assigned to each pixel of the camera image (Fig. 3). Since a scene is composed of previously defined and therefore known objects, the segmentation of the data is inherently given, and the manual annotation that was previously required becomes obsolete. Both the measurement data (for example camera images) and the segmented images, which together make up the training data, can therefore be created from a 3D model with 100% accuracy in the class assignment, whereas the manual process is always subject to errors. Synthetic creation of training data is thus not only more efficient but also more accurate than manual data annotation. In addition, the training data can be generated from different, freely selectable perspectives in space, so that a single data set can serve various applications with different recording perspectives, for example from the air, from the street or from the sidewalk.
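Why the segmentation is "inherently given" can be illustrated with a small example. Suppose the renderer writes, alongside the color image, a buffer that stores for each pixel the ID of the object visible there. Because every object in the scene was placed deliberately, its class is known, and the class mask follows from a simple lookup. The following is only a minimal sketch under these assumptions, not the actual implementation; the object IDs, the class names and the `id_buffer` layout are hypothetical.

```python
# Hypothetical scene catalog: every object placed in the 3D model is known,
# so its class is known as well. IDs and class names are illustrative only.
OBJECT_CLASS = {
    0: "sky",
    1: "road",
    2: "building",
    3: "car",  # first car instance
    4: "car",  # second car instance, same class
}


def segment(id_buffer):
    """Turn a per-pixel object-ID buffer into a per-pixel class mask."""
    return [[OBJECT_CLASS[obj_id] for obj_id in row] for row in id_buffer]


# A tiny 3x4 "rendering": each entry is the ID of the object seen at that pixel.
id_buffer = [
    [0, 0, 2, 2],
    [0, 3, 4, 2],
    [1, 1, 1, 1],
]

mask = segment(id_buffer)
for row in mask:
    print(row)
```

No pixel has to be labeled by hand: the mask is exactly as correct as the scene catalog itself, and re-rendering the same scene from another viewpoint only changes `id_buffer`, not the lookup.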

A deep understanding of the process chain is key

When rendering a scene, we draw on our comprehensive expertise in modeling and measurement technology. In addition to the pure 3D geometry, the material properties of the surfaces, the lighting situation, the weather conditions and, depending on the application, further properties have to be modeled. Dynamic properties such as the movement of objects in the 3D scene can also be relevant. For reliable and efficient data evaluation, the measurement system used in the final application must be taken into account as well. The goal is to generate realistic virtual measurement data for various measuring devices from the 3D models and all of this additional information.

Measurement data from mobile mapping systems is recorded with laser scanners and cameras and is primarily available as 3D point clouds, 2D camera images or 360° panoramic images. When modeling, it is important to emulate the device-specific characteristics: how does the measuring system interact with the environment? This requires algorithmically simulating, in sufficient depth, both the interaction of the light (sunlight, ambient light, artificial lighting, laser beam) with the respective surface material and the processes inside the respective measuring device (scanner, camera).
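As an illustration of what simulating the interaction between a laser pulse and a surface can look like in its simplest form, the sketch below uses a common textbook simplification: a diffuse (Lambertian) surface whose return scales with the material reflectance and the cosine of the incidence angle and falls off with the square of the distance. This model is an assumption chosen for illustration, not a description of the simulation used at Fraunhofer IPM; the function name `lidar_return` and the reflectance values are hypothetical.

```python
import math


def lidar_return(distance_m, incidence_deg, reflectance, emitted_power=1.0):
    """Relative received power for one laser pulse on a diffuse surface.

    Assumes a Lambertian surface: the return scales with the material
    reflectance and the cosine of the incidence angle, and falls off with
    the square of the distance. Atmospheric and detector effects are ignored.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    cos_theta = math.cos(math.radians(incidence_deg))
    if cos_theta <= 0:
        return 0.0  # surface faces away from the scanner: no return
    return emitted_power * reflectance * cos_theta / distance_m ** 2


# Hypothetical reflectance values, for illustration only.
MATERIALS = {"asphalt": 0.1, "white_paint": 0.8}

for name, rho in MATERIALS.items():
    power = lidar_return(distance_m=10.0, incidence_deg=30.0, reflectance=rho)
    print(name, power)
```

A more faithful simulation would add, for example, beam divergence, multiple returns and sensor noise. How much of that is worth modeling depends on the measuring device and on the application.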

For maximum efficiency in developing and applying software for training artificial neural networks (ANNs), a careful balance must be struck between a simulation that is as physically accurate as possible and a heuristic approximation of the respective phenomena that is sufficient for the task. This requires extensive knowledge of the respective recording process and of the state of the art in the algorithmic simulation of such processes. Fraunhofer IPM maintains a library of algorithms that generate a variety of 3D scenes with different properties by parameterizing a modular system of generic 3D model components.
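The idea of deriving many scenes from a few generic components through parameterization can be sketched as follows. This is a hypothetical, heavily simplified example and does not reflect the actual Fraunhofer IPM library: the `SceneParams` fields, the value ranges and the function names are assumptions made for illustration. Each sampled parameter set would then be handed to a scene builder and renderer.

```python
import random
from dataclasses import dataclass

WEATHER_OPTIONS = ["clear", "overcast", "rain"]  # illustrative choices


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical parameter set describing one generated scene."""
    n_buildings: int
    n_cars: int
    weather: str
    sun_elevation_deg: float


def sample_scene(rng):
    """Draw one scene description from simple, made-up parameter ranges."""
    return SceneParams(
        n_buildings=rng.randint(2, 12),
        n_cars=rng.randint(0, 20),
        weather=rng.choice(WEATHER_OPTIONS),
        sun_elevation_deg=round(rng.uniform(5.0, 70.0), 1),
    )


def generate_scenes(count, seed=0):
    """Generate a reproducible list of scene descriptions."""
    rng = random.Random(seed)  # fixed seed: the same scenes on every run
    return [sample_scene(rng) for _ in range(count)]


scenes = generate_scenes(3, seed=42)
for scene in scenes:
    print(scene)
```

Seeding the random generator keeps a data set reproducible, which helps when a network trained on it has to be compared against a later variant.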